Here are two demos of the latest in AI, both from the past few days, that have significantly impressed me. Demos like these keep reminding me that most people don't realise how fast the field is developing, and whatever your views on AI and the impact it will have, that is surely a bad thing.

Devin

Devin advertises itself as the first AI software engineer. Instead of just completing code or making suggestions, it works across a range of tools to complete coding tasks, which often require several kinds of action, such as downloading packages and repositories, writing code, and testing the output (which guards against the not-infrequent problem of LLMs providing code that doesn't work in practice).

The videos below are demos from Devin's launch announcement, but from what I've seen, they are representative of the experience of using it.

These aren't just cherrypicked demos. Devin is, in my experience, very impressive in practice. https://t.co/ZUJqDyLWmD

— Patrick Collison (@patrickc) March 12, 2024

Demo Videos

Using Devin in a production context

Completing Upwork jobs using Devin

Here is a longer demo of it in a real-life scenario:

I’m blown away by Devin.

Watch me use it for 27min.

It’s insane.

The era of AI agents has begun. pic.twitter.com/WjMa8TSc0P

— Mckay Wrigley (@mckaywrigley) March 13, 2024

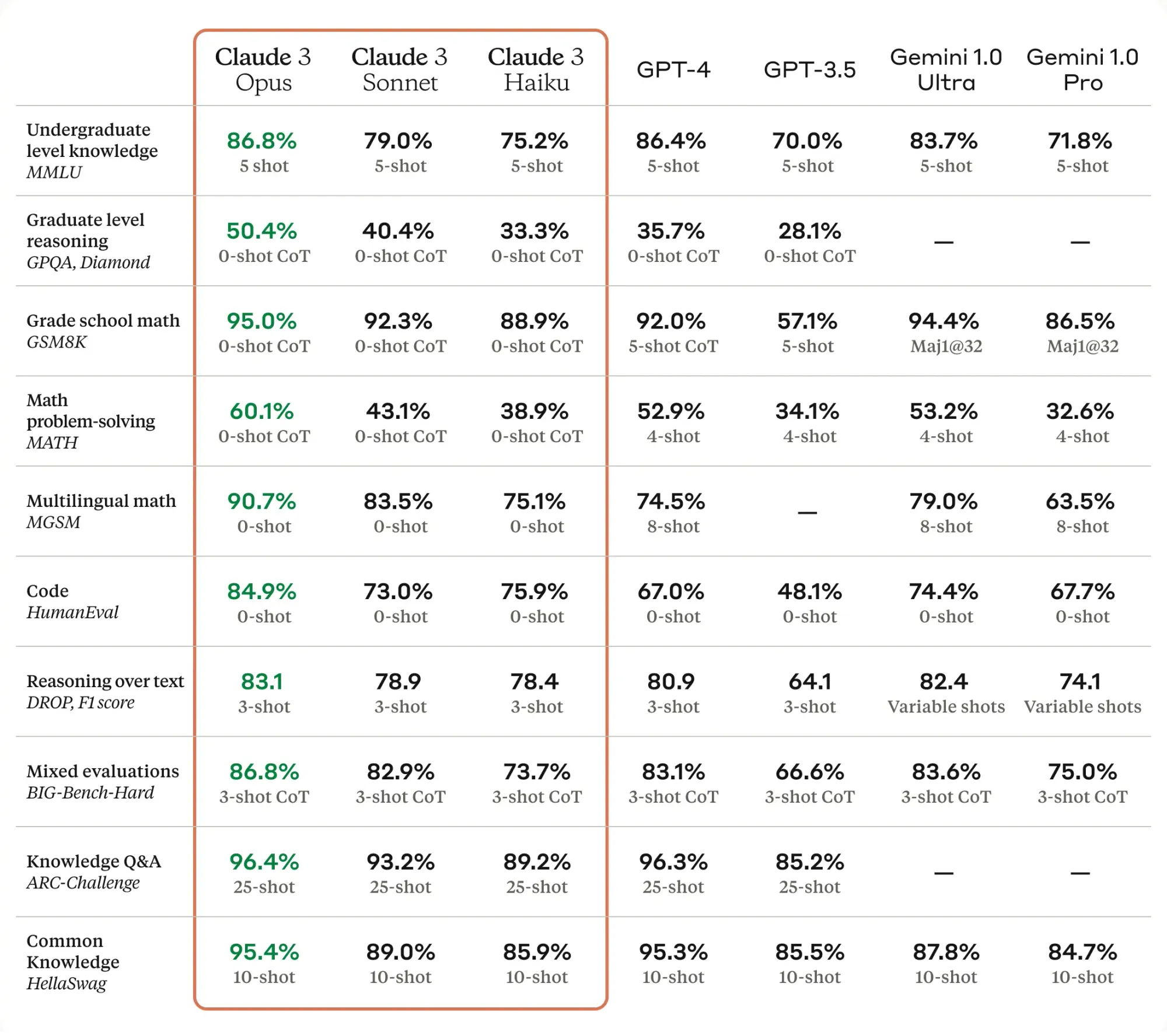

The reason people are particularly excited about Devin is that it performs significantly better on SWE-bench than any other product so far. SWE-bench is a set of tasks for evaluating how effectively an LLM can solve real-world GitHub issues. It draws on 2,294 Issue-Pull Request pairs from 12 popular Python repositories (or a subset of those): the system under test is given an issue, and its success is judged by running unit tests, with the behaviour of the codebase after the real PR was merged serving as the reference. This means that it evaluates the end result, and is therefore solution-agnostic.
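To make the scoring idea concrete, here is a minimal sketch of how a headline percentage could be computed from per-task test results. It is not the benchmark's actual harness: the `TaskResult` type, the `resolution_rate` function, the sample data, and the rule that a task counts only when every checked test passes are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """Hypothetical record of one benchmark task after the fix is applied."""
    task_id: str
    tests_passed: int
    tests_total: int

    @property
    def resolved(self) -> bool:
        # Assumption: a task only counts if every checked unit test passes.
        return self.tests_total > 0 and self.tests_passed == self.tests_total


def resolution_rate(results):
    """Percentage of tasks whose tests all pass, regardless of how the fix was written."""
    if not results:
        return 0.0
    resolved = sum(1 for r in results if r.resolved)
    return 100.0 * resolved / len(results)


# Made-up results for four tasks; two of them pass every test.
results = [
    TaskResult("task-a", 5, 5),
    TaskResult("task-b", 3, 5),
    TaskResult("task-c", 0, 2),
    TaskResult("task-d", 4, 4),
]
print(f"{resolution_rate(results):.2f}%")  # 50.00%
```

Because only the test outcomes are inspected, two completely different patches score the same as long as the tests pass, which is what makes this kind of evaluation solution-agnostic.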

In this set of tests, Devin ranked highest, successfully completing 13.86% of tasks. The next closest was Claude 2 at 4.80%. Claude 3 has not yet been run on this evaluation, but is impressive in its own right on other tests (included below).

This is particularly interesting to me for a few reasons:

- This isn't a like-for-like comparison. Devin is a product built using LLMs in an agentic manner (connecting a model to tools and calling it repeatedly over many iterations to resolve a problem), whereas the other results are presumably based on using the model directly. Those models would not have had access to the tools that Devin does, such as a browser, a terminal and more.

- Devin isn't a model itself; it is an agent that utilises a model. Many people have suggested that Devin is using GPT-4 under the hood. If so, this supports my belief that the current generation of models has much more power than we currently realise when used in this manner. With GPT-4 (and other similar models), the limiting factor for using them this way in production is cost. Here is one prediction of what Devin costs to run:

sanity check on @cognition_labs

Per task, Devin is likely doing 10-20 maxed out GPT4-32k calls per minute, thats ~$2-$5/min, or $120-$300/hr depending on Input/Output token ratio.

my fellow waterloo interns will gladly outperform Devin for that hourly cost :)

— brian-machado-finetuned-7b (e/snack) (@sincethestudy) March 13, 2024
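For anyone who wants to play with this kind of estimate, here is a rough sketch of the arithmetic. The function names are mine, and the token counts and per-1k-token prices are inputs you would supply yourself; nothing below is real pricing. The only figures taken from the tweet are the $2-$5 per minute range and its hourly equivalent.

```python
def cost_per_minute(calls_per_minute, input_tokens, output_tokens,
                    input_price_per_1k, output_price_per_1k):
    """Estimated spend per minute for an agent making repeated LLM calls.

    All arguments are assumptions supplied by the caller, not known values.
    """
    cost_per_call = (
        (input_tokens / 1000) * input_price_per_1k
        + (output_tokens / 1000) * output_price_per_1k
    )
    return calls_per_minute * cost_per_call


def cost_per_hour(per_minute):
    """Convert a per-minute cost into a per-hour cost."""
    return per_minute * 60


# The tweet's per-minute range, converted to the hourly range it quotes.
low, high = cost_per_hour(2), cost_per_hour(5)
print(low, high)  # 120 300
```

The per-minute figure is very sensitive to how full each call's context really is and to the input/output split, which is why the tweet gives a range rather than a single number.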

Therefore, I'm building Edgar with a few things in mind:

- The priority should be accuracy and performance. Lots of people have been impressed by AI demos but struggle to find a use for it in their own lives. Higher-value tasks naturally take longer, because they involve running through more steps.

- I expect cost to decrease exponentially over the course of this year, making existing high-accuracy products available at a market rate.

- I expect speed to increase significantly for the current generation of models (GPT-4), so that chaining calls together in an agentic manner will be viable without waiting too long.
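If "chaining calls together in an agentic manner" sounds abstract, the rough shape is a loop: ask the model what to do next, run the tool it picks, feed the result back, and repeat. The sketch below is a toy version under my own assumptions. `run_agent`, the action dictionary format, and the scripted stand-in for the model are all hypothetical, and this is not a description of how Devin or Edgar is actually built.

```python
def run_agent(task, model, tools, max_steps=10):
    """Toy agent loop: the model picks an action, a tool runs it, and the result is fed back."""
    history = [("task", task)]
    for _ in range(max_steps):
        action = model(history)  # in a real agent, this is one LLM call
        if action["type"] == "finish":
            return action["result"], history
        tool = tools[action["tool"]]
        observation = tool(action["input"])
        history.append((action["tool"], observation))
    # Step budget exhausted without the model declaring it is done.
    return None, history


def scripted_model(history):
    # Stand-in for an LLM: run the tests once, then finish.
    if len(history) == 1:
        return {"type": "tool", "tool": "terminal", "input": "pytest"}
    return {"type": "finish", "result": "done"}


# A fake terminal tool that just echoes the command it was given.
tools = {"terminal": lambda cmd: f"ran: {cmd}"}

result, history = run_agent("fix the failing test", scripted_model, tools)
print(result)        # done
print(len(history))  # 2
```

Every pass through the loop is another model call, which is why both the cost and the speed of the underlying model matter so much for this style of product.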

Figure (in partnership with OpenAI)

Figure have been building humanoid robots, and only a few weeks ago they announced a partnership with OpenAI. This is the outcome of that partnership so far:

With OpenAI, Figure 01 can now have full conversations with people

-OpenAI models provide high-level visual and language intelligence

-Figure neural networks deliver fast, low-level, dexterous robot actions

Everything in this video is a neural network: pic.twitter.com/OJzMjCv443

— Figure (@Figure_robot) March 13, 2024

I think people underestimate the exponential curve we're on, and the impact it will have on industries in the coming years.

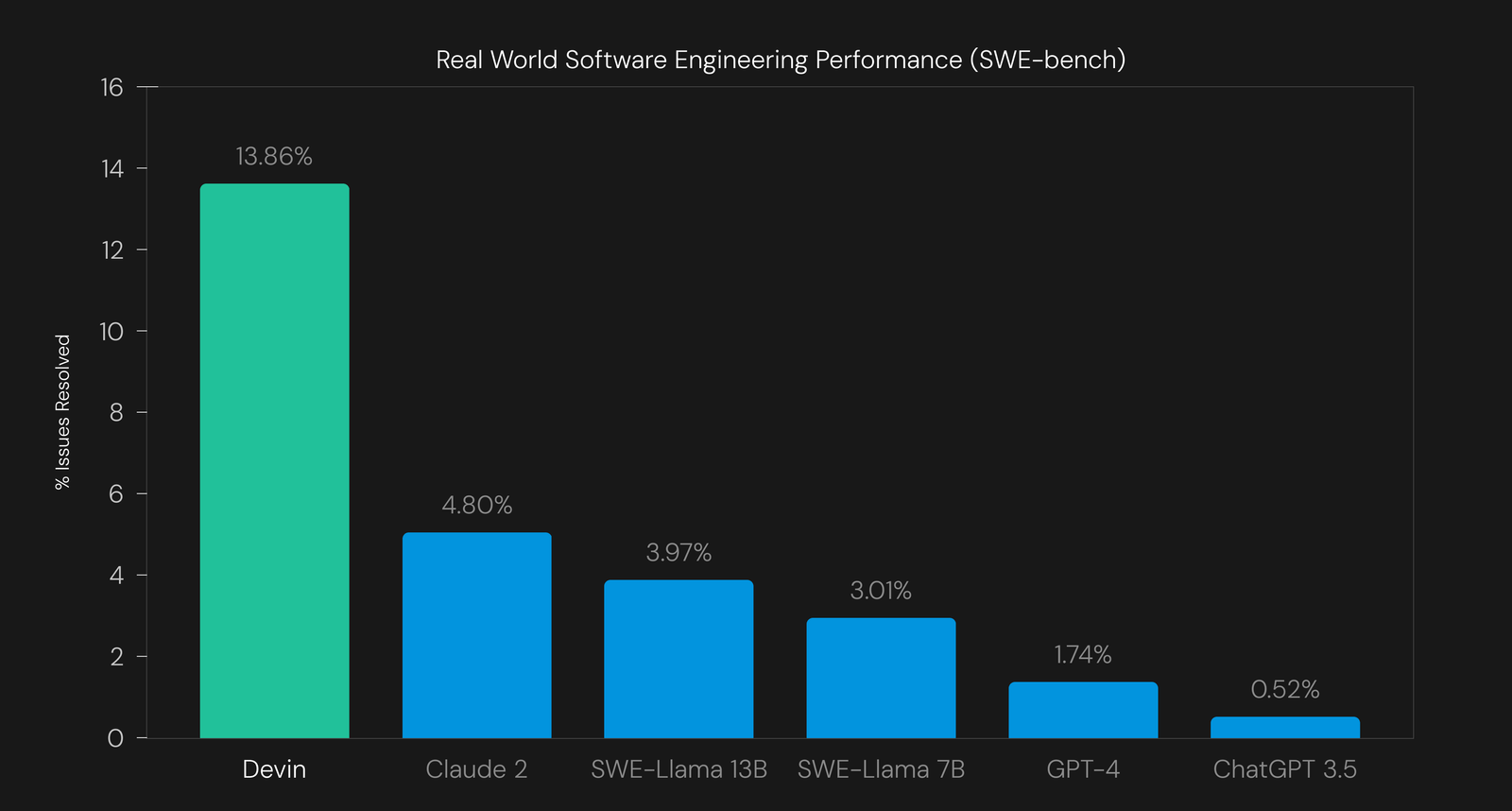

Claude 3 Benchmarking