The Phases of AI Integration

I believe it will take several years for the power of the models already available today to be fully realised in commercial settings. I also believe products will be built in an order that matches the phases below.

Why is this an important point to consider? Because the nature of the UX/UI changes drastically from one phase to the next. Too many products are following existing UX paradigms that no longer make sense in a post-AI world.

Phases of AI Integration

1. Copilot Assistance

What it is: Human and AI working in parallel.

The first phase is copilot assistance, and for some tasks this might end up being the optimal way to interact. Here, a human and an AI model work together in parallel, at all times.

GitHub Copilot is the most famous example: for anyone unfamiliar with it, it is essentially intelligent autocomplete for coding tasks. It does drastically reduce the time needed to complete coding tasks. Even just intelligently closing the functions you're writing adds up to a considerable amount of time over the course of a week, month or year. But I find it often does much more than that, completing whole functions or components at a time.

The focus at this phase is to increase the productivity of a human doing an existing task or workflow.

2. Task-Level Collaboration

What it is: Collaboration on a specific task.

The next phase I see is task-level collaboration. Imagine a task: you need to write a blog post. A simple example of this phase is a tool that asks you for the title of a blog post and writes the post, which you then copy and paste into your CMS to prepare for publication (editing, tagging and posting).

3. Task Completion

What it is: Full automation of tasks, including determination, creation, and execution.

Take the same example: you want to write a blog post. The real task isn't writing a blog post from a given title; it is determining which blog post to write based on an objective, writing it, and ultimately publishing it.

All of these steps can be automated.
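To make the difference between phases 2 and 3 concrete, here is a minimal Python sketch. Every function in it (`generate_post`, `choose_title`, `publish`) is a hypothetical stub standing in for a model call or a CMS API, not any real product's interface. What matters is the shape of the workflow: in phase 2 the human supplies the title and does the publishing, while in phase 3 determination, creation and execution are chained together automatically.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    body: str
    published: bool = False


def generate_post(title: str) -> Post:
    # Hypothetical stand-in for a language-model call that drafts a post from a title.
    return Post(title=title, body=f"Draft article about {title.lower()}.")


def choose_title(objective: str) -> str:
    # Hypothetical stand-in for a model deciding *what* to write, given an objective.
    return f"How to {objective}"


def publish(post: Post) -> Post:
    # Hypothetical stand-in for a CMS API call; here it only flips a flag.
    post.published = True
    return post


def task_level_collaboration(title: str) -> Post:
    # Phase 2: the human provides the title and later copies the draft into the CMS.
    return generate_post(title)


def task_completion(objective: str) -> Post:
    # Phase 3: determination, creation and execution all happen without the human.
    title = choose_title(objective)
    post = generate_post(title)
    return publish(post)


if __name__ == "__main__":
    draft = task_level_collaboration("Choosing a CRM")
    print(draft.published)  # False: a human still has to edit, tag and post it
    done = task_completion("rank for small-business CRM searches")
    print(done.title, done.published)
```

The human's input shrinks from a title plus manual publishing to a single objective; in a real product each stub would be replaced by model and CMS calls.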

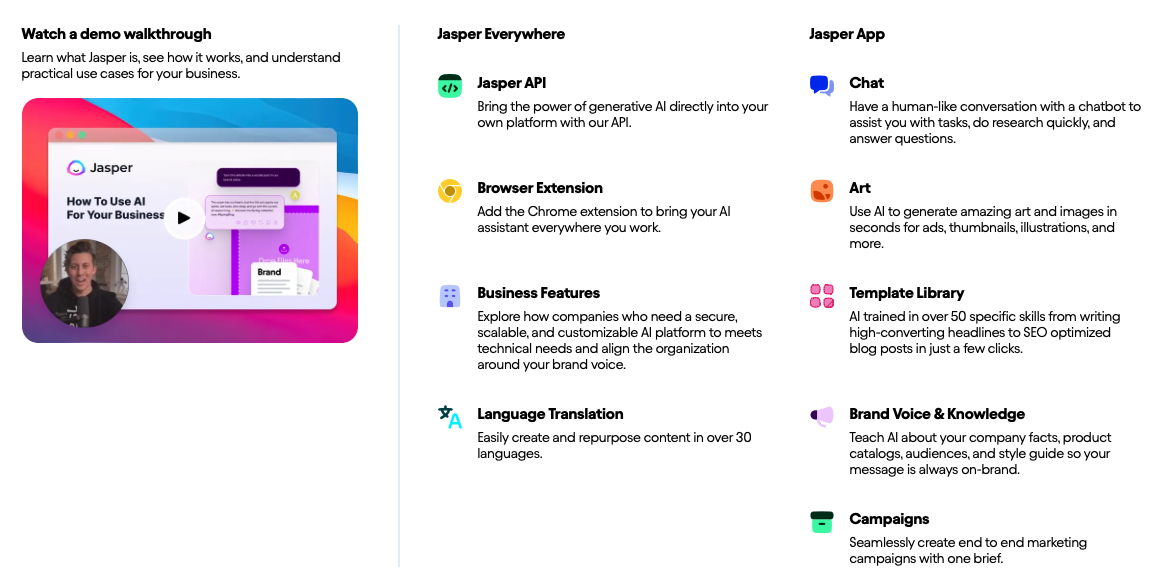

And to me, it indicates a lack of product vision that companies like AI ghostwriting tools are focused on building out many different products, instead of solving one problem properly. Just take this example product list:

4. Closed Loop Workflow Completion

What it is: Completion and ongoing maintenance of a task, like updating blog posts or code.

Taking the above example further, the 'job' is not done once the blog post is live. If, for example, you're posting to a blog to optimise for search engine rankings, the post requires monitoring and updating. If you're writing about a topic that is likely to change, you might also want to update the post with more up-to-date information.

Closed loop workflow completion takes the idea of completing a task and extends it to maintaining that task afterwards. Most work needs to be monitored, iterated on and updated: code needs to be kept current with the latest packages, blog posts need to be updated with the latest information, and emails need follow-ups that reflect the latest pitch a company is using to sell its product. This phase requires the product to be 'always on'. In other words, rather than just responding to incoming requests in real time, it is scanning the horizon and analysing what needs to be done.
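A minimal sketch of the 'always on' part, assuming the product keeps a record of each published post with its last update date and its search ranking. The `PublishedPost` fields, the 180-day threshold and the ranking rule are all hypothetical choices for illustration. Rather than waiting for a request, each `scan` pass looks for work on its own:

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class PublishedPost:
    slug: str
    last_updated: date
    search_rank: int    # hypothetical: current position in search results (1 is best)
    previous_rank: int  # hypothetical: position recorded at the previous scan


def needs_attention(post: PublishedPost, today: date, max_age_days: int = 180) -> list[str]:
    """Return the reasons, if any, that this post should be revisited."""
    reasons = []
    if today - post.last_updated > timedelta(days=max_age_days):
        reasons.append("stale content")
    if post.search_rank > post.previous_rank:  # a larger number is a worse position
        reasons.append("ranking dropped")
    return reasons


def scan(posts: list[PublishedPost], today: date) -> dict[str, list[str]]:
    """One pass of the always-on loop: nobody asked, the system looks for work itself."""
    return {p.slug: reasons for p in posts if (reasons := needs_attention(p, today))}


if __name__ == "__main__":
    posts = [
        PublishedPost("crm-guide", date(2023, 1, 10), search_rank=8, previous_rank=3),
        PublishedPost("pricing-faq", date(2023, 9, 1), search_rank=2, previous_rank=2),
    ]
    print(scan(posts, today=date(2023, 10, 1)))
    # {'crm-guide': ['stale content', 'ranking dropped']}
```

In practice a scheduler (a cron job, for example) would call `scan` periodically and feed each flagged post back into an automated rewrite-and-republish workflow, which is what closes the loop.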

What is optimal here?

Because I've written these phases as a hierarchy, it might read as though the solutions get better as you move through them, and I think that could be directionally true. But there is a need for a range of solutions here. Some people probably want a more hands-on approach to blog post writing, in which case they might prefer a GitHub Copilot-esque product to having no input in the process. Others might want no involvement in the workflow at all, and just receive a monthly activity report, as if from an employee reporting on their progress.

Big O Notation in Computer Science

My mind is drawn to the concept of 'Big O notation' in Computer Science.

A definition of Big O Notation

“Big O notation is a mathematical notation that describes the limiting behaviour of a function when the argument tends towards a particular value or infinity. It is a member of a family of notations invented by Paul Bachmann, Edmund Landau, and others, collectively called Bachmann–Landau notation or asymptotic notation.”

In a workflow using AI, the level of human involvement might vary depending on the complexity and specificity of the task. If we treat n as the size or intricacy of a task, simpler, well-defined tasks may require minimal human oversight, akin to algorithms with O(1) complexity, where execution time is constant. As tasks become more nuanced and intricate, human involvement may grow logarithmically or linearly, corresponding to O(log n) or O(n). For highly individualised or complex activities, human involvement may grow even faster, reflecting quadratic or exponential complexities such as O(n²) or O(2ⁿ). The analogy illustrates the delicate balance between automation and human oversight: in both AI workflows and algorithms, choosing the right level of complexity is vital to maintaining efficiency without compromising quality.

The goal of AI products should be to move more and more tasks, including increasingly complex ones, into O(1) or O(n) human involvement, so that the task as a whole (not just its sub-tasks) can be considered 'automated'.
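To put rough numbers on the analogy, the sketch below treats n as the number of sub-tasks in a piece of work and counts hypothetical human touchpoints (reviews, approvals, edits) under each growth class. Which kinds of task fall into which class is an illustrative assumption, not a measurement.

```python
import math

# Illustrative assumption: n >= 1 is the number of sub-tasks, and each function returns
# how many human touchpoints (reviews, approvals, edits) the workflow needs.
HUMAN_INVOLVEMENT = {
    "O(1)": lambda n: 1,                                    # e.g. sign off one monthly report
    "O(log n)": lambda n: max(1, math.ceil(math.log2(n))),  # e.g. spot-check a slowly growing sample
    "O(n)": lambda n: n,                                    # review every sub-task once
    "O(n^2)": lambda n: n * n,                              # check every sub-task against every other
    "O(2^n)": lambda n: 2 ** n,                             # weigh every combination of sub-tasks
}


def print_table(sizes=(4, 16, 64)):
    # Print the touchpoint count for each growth class at a few task sizes.
    for n in sizes:
        row = ", ".join(f"{name}={f(n)}" for name, f in HUMAN_INVOLVEMENT.items())
        print(f"n={n}: {row}")


if __name__ == "__main__":
    print_table()
```

Even at n = 16 the gap is stark: 16 touchpoints under O(n) versus 65,536 under O(2^n), which is why shifting tasks into the lower classes matters more than shaving effort within a class.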

What's taking so long?

GPT-4 has been available since 14th March 2023. ChatGPT has been available for even longer, since 30th November 2022. And yet, we're primarily still in phases 1 and 2. I predict we'll see much more of 3 and 4 this year and next.